A new approach to focus groups

Most social researchers have run focus groups, and have probably come across some of their challenges, such as:

- scheduling participants

- how long transcription can take

- not being able to feed new findings into past focus groups

- the interviewer effect of being visible to the group

For an evaluation of new e-learning courses, Acas used a new approach to focus groups to overcome these and get good quality feedback on our courses: an online community.

Acas is the Advisory, Conciliation and Arbitration Service, an independent, government-funded public body that works with employers and employees to improve workplace relationships. We do this through several services, including a helpline, conflict resolution services, and training courses. E-learning is part of the training offer: short, self-directed online courses.

An online community is a bit like an asynchronous focus group

We commissioned YouGov to evaluate our 4 new e-learning courses on workplace topics like equality and diversity, and employment contracts. We anticipated mixed methods, with an online survey and some remote focus groups or interviews.

We discussed options for how YouGov would deliver the evaluation, and agreed on a different method: an online community, in which around 30 participants would be asked discussion questions every day for a week about the courses they’d tried out. Themes included feedback on the sign-up process and course navigation; the reasons behind what they said in the initial survey; and feedback on our end-of-course survey.

Here’s an example of a day’s questions:

Thinking about your experience of the e-learning courses (including elements such as content, design, interactivity, etc) please tell us:

1. What, if any, were the most valuable parts of the e-learning courses? Why? Please mention any of the things that stood out to you in any of the courses.

2. What, if any, were the least valuable parts of the e-learning courses? Why? Please mention any of the things that stood out to you in any of the courses.

3. What barriers or challenges, if any, did you experience during the e-learning courses? Please mention any of the things that stood out to you in any of the courses.

The free text questions in the online community look a bit like a typical qualitative survey – but with three key differences:

- respondents could see what others said, and agree with, challenge, or expand on those comments

- the community moderators (YouGov researchers) could respond to comments in real time, prompting for more detail or asking for clarity

- we (the commissioners) could see what people were saying, and ask the researchers to prompt, or ask new questions

Participants knew that we, as the commissioners, could see what they were saying, but they couldn’t see us, which reduced the interviewer effect. We also couldn’t talk to them directly, so our input was moderated.

The community includes multiple ways of asking questions and seeing the data

Below are a few examples of card sorting, word clouds, and heat maps. The heat maps in particular worked well for our project – we wanted to know what people thought of online courses and elements of a webpage. This way, the researchers could show them reminders of pages, and participants could click rather than having to describe something.

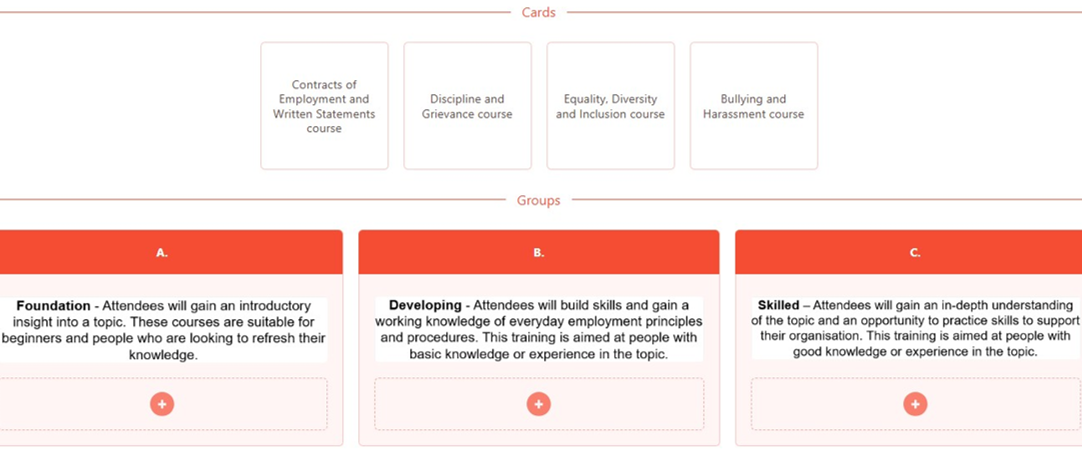

Card sorting:

The image of card sorting above shows four training course titles under the label “Cards”. In this example the “cards” displayed are:

– Contracts of employment and written statements course

– Discipline and grievance course

– Equality, diversity and inclusion course

– Bullying and harassment course

Below this, three categories are shown under the label “Groups”. Participants are asked to sort the cards into the three categories or “groups”, which in this example are:

– Foundation

– Developing

– Skilled

Word Cloud:

The image of a word cloud above shows the words entered by participants when asked to describe the e-learning modules. Some words in the cloud appear larger because more participants entered them, for example “courses” and “information”. Others appear smaller because fewer participants entered them, for example “contracts” and “topic”. The words in the word cloud are displayed in various colours.
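For anyone curious about the sizing idea behind a word cloud, here is a minimal Python sketch. It is not how Recollective builds its word clouds (we don't know the details of that); the function name, the font size range, and the example responses are all made up for illustration.

```python
from collections import Counter


def word_sizes(responses, min_size=12, max_size=48):
    """Map each word to a font size that grows with how many participants entered it."""
    # Count each word once per participant, so repeating a word doesn't inflate it.
    counts = Counter()
    for response in responses:
        counts.update({word.lower() for word in response.split()})

    if not counts:
        return {}

    low, high = min(counts.values()), max(counts.values())
    if low == high:
        # Every word is equally common, so give them all the same size.
        return {word: max_size for word in counts}

    # Scale linearly between the least and most common words.
    scale = (max_size - min_size) / (high - low)
    return {word: round(min_size + (n - low) * scale) for word, n in counts.items()}


# Hypothetical responses, one string per participant (not real data from the evaluation).
responses = [
    "courses information clear",
    "information courses",
    "courses contracts",
]
sizes = word_sizes(responses)
```

In this made-up example, “courses” gets the largest size because all three participants entered it, while “contracts” gets the smallest because only one did.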

Heat map:

The image of a heat map above shows the “Welcome to Acas e-learning” web page. There are clusters of thumbs up and thumbs down icons across the page, placed by participants. Main clusters are seen in the following places:

– The menu bar on the top of the web page

– A list of “get started” hyperlinks

– A box titled “Course catalogue updates for 2025”

– A box titled “my courses”

The community solved some common problems with focus groups

Scheduling participation was much easier – people could respond to the questions at any point during the day, even if they were only free at 4am, or 10pm. No need to try to find a time that worked for everyone.

All the participants could talk to each other – rather than being split into smaller groups. It’s also possible to have a closed community, where participants can’t see what others say, which can work well for more sensitive topics.

There was no need for transcription as everything was shared in writing – though this can present its own challenges, discussed below. The community software (Recollective) does allow for recording and uploading videos, which would need transcription, and careful consent for sharing the person’s image.

Finally, we could feed new responses to the whole group – if someone raised something no-one else had, we could go back and ask people who’d already responded what they thought. With focus groups or interviews, that’s much harder once one session is over.

There are some challenges with the online community process

Because this was a new methodology, we had to get stakeholders on board. Their main concern was that the community wouldn’t work, and then we’d be out of time to try something else. YouGov really helped with these concerns, even giving us a demo of how the community worked so people understood it better. Combined with data on the success of previous similar projects, we were able to get permission to try out the online community.

It also doesn’t work well with people who aren’t comfortable online. Our research was about online learning, so this wasn’t as much of a concern, but it could definitely limit other projects. For future projects, we’d also have to consider whether participants would be put off by needing to write responses. Recording videos is an alternative, but it isn’t necessarily a skill everyone has.

The other challenge was the time needed for set-up – because there were multiple different task types, some of them new to us, we needed more time to consider what questions to ask with which task type.

Top tips for using an online community for research

These come from YouGov as the supplier, stakeholders in Acas, and me as the project commissioner:

- Prepare your case for doing things differently, so that stakeholders are on board with the new method

- Expect setup to take longer

- Be creative in what activities you choose, based on what you want to get out of the research, and on how you want to analyse the data

- Lean on the expertise of the people running the community

- Get involved in looking at the responses once the community is live, and reflect on the responses as they come in

Find out more

You can watch a 2-minute demo on YouTube, or find out more on the Recollective website. And if you’d like to know more about Acas training – or take one of our e-learning courses – you can do so on the Acas website.

You can also email me (estarling@acas.org.uk) if you want to talk more about how the online community worked for us, or you’re thinking about trying it yourself.

Finally, you can read about the Analysis in Government Month event I presented in May 2025 about Qualitative research through an interactive community.